Anyone who works with Siebel applications knows that high memory consumption on the application servers can sometimes cause Object Managers to crash. This usually happens when a process reaches the memory limit of a 32-bit process (~2 GB, depending on the OS). We call this behavior a memory leak, and it can have several causes, such as unsupported hardware, misconfiguration, non-certified third-party software, or scripts that create objects and do not destroy them properly.

Regarding the last cause, objects that are not destroyed, I created a script that analyzes the eScript code from the Siebel Repository and generates a list of variables that should be reviewed and fixed so that they are destroyed properly. To help other Siebel maintainers review their own repositories, I made it public as a web application.
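
The tool's actual analysis logic is not shown in this post. Purely as an illustration of the idea, the Python sketch below flags variables that receive an object reference and are never set to `null` in the same script. The method list in `OBJECT_FACTORIES`, the regular expressions, and the function name `find_unreleased` are assumptions made for this example, not the tool's implementation.

```python
import re
from typing import List

# Siebel methods that return object references (a partial list, assumed for this sketch).
OBJECT_FACTORIES = (
    "GetBusObject", "GetBusComp", "GetService", "NewPropertySet", "ActiveBusObject",
)

# Matches "var x = ...GetBusObject(" style assignments on a single line.
ASSIGN_RE = re.compile(
    r"\b(?:var\s+)?(\w+)\s*=\s*[^;\n=]*\b(?:" + "|".join(OBJECT_FACTORIES) + r")\s*\("
)
# Matches "x = null;" releases.
RELEASE_RE = re.compile(r"\b(\w+)\s*=\s*null\s*;")


def find_unreleased(script: str) -> List[str]:
    """Return variables assigned an object reference but never set to null."""
    created: List[str] = []
    for match in ASSIGN_RE.finditer(script):
        name = match.group(1)
        if name not in created:
            created.append(name)
    released = set(RELEASE_RE.findall(script))
    return [name for name in created if name not in released]


SAMPLE = """
function Foo() {
  var bo = TheApplication().GetBusObject("Account");
  var bc = bo.GetBusComp("Account");
  var ps = TheApplication().NewPropertySet();
  bc = null;
  bo = null;
}
"""

print(find_unreleased(SAMPLE))  # ps is created but never released
```

A text-based check like this ignores scope, comments, and `finally` blocks, so its output is only a list of candidates for manual review.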

Want to check it out? Easy! Go to the MemLeak Tool page and follow the instructions there (basically, you export the eScript code, upload it as a .csv file, and then download the result file with the list of variables that need to be reviewed). Let me know how it goes 🙂

Regarding the architecture and implementation of the MemLeak Tool web page, I decided to explore AWS serverless services to host it. I also chose SAM (Serverless Application Model) to deploy the services programmatically (infrastructure as code).

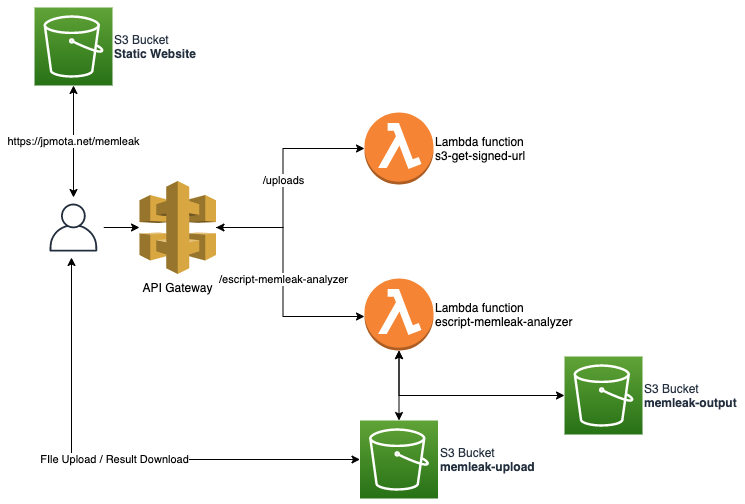

Below is a high-level diagram of the solution:

The static part of the website is hosted in Amazon S3. It is a simple Vue.js frontend application configured with the API Gateway URL.

The first part of the process is when the user uploads the CSV file with the scripts for review. To do this, the frontend first invokes the s3-get-signed-url Lambda function to get a pre-signed URL, which allows the upload.
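
The s3-get-signed-url function itself runs on Node.js and its code is not shown here. As a rough Python sketch of the same idea, the handler below asks S3 for a pre-signed `put_object` URL. The response field names (`uploadURL`, `key`), the random object key, and the 300-second expiry are assumptions for illustration.

```python
import json
import os
import uuid


def build_upload_response(s3_client, bucket, expires_in=300):
    """Create a pre-signed PUT URL for a new, randomly named CSV object."""
    key = f"{uuid.uuid4()}.csv"  # assumed naming scheme
    url = s3_client.generate_presigned_url(
        "put_object",
        # ContentType is part of the signature, so the browser must send
        # the same Content-Type header when it uploads the file.
        Params={"Bucket": bucket, "Key": key, "ContentType": "text/csv"},
        ExpiresIn=expires_in,
    )
    return {
        "statusCode": 200,
        "body": json.dumps({"uploadURL": url, "key": key}),
    }


def handler(event, context):
    # boto3 is imported lazily so the helper above can be exercised without AWS.
    import boto3

    return build_upload_response(boto3.client("s3"), os.environ["UploadBucket"])
```

The frontend would then send the file with an HTTP PUT to the returned URL, without needing AWS credentials of its own.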

Once the file is uploaded, the frontend invokes another Lambda function, escript-memleak-analyzer. This function downloads the file from the S3 bucket to its local /tmp folder, runs the analysis, and creates the output file in the same /tmp folder. It then uploads the output file to a different S3 bucket (memleak-output) and requests a pre-signed URL, which it sends back to the frontend so that the user can download the file.
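
The steps above can be sketched in Python roughly as follows. This is not the real function: `run_analysis` is a hypothetical placeholder that only copies the file, and the request and response shapes (`key`, `downloadURL`) and the `result-` prefix are assumptions.

```python
import json
import os

TMP_DIR = "/tmp"  # Lambda's writable scratch directory


def run_analysis(input_path, output_path):
    """Hypothetical stand-in for the eScript analysis: copies input to output."""
    with open(input_path, "rb") as src, open(output_path, "wb") as dst:
        dst.write(src.read())


def process_upload(s3_client, key, upload_bucket, output_bucket,
                   tmp_dir=TMP_DIR, analyze=run_analysis, expires_in=300):
    """Download the CSV, analyze it, upload the result, return a download URL."""
    name = os.path.basename(key)
    input_path = os.path.join(tmp_dir, name)
    output_path = os.path.join(tmp_dir, "result-" + name)

    s3_client.download_file(upload_bucket, key, input_path)
    analyze(input_path, output_path)

    output_key = os.path.basename(output_path)
    s3_client.upload_file(output_path, output_bucket, output_key)
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": output_bucket, "Key": output_key},
        ExpiresIn=expires_in,
    )


def lambda_handler(event, context):
    # boto3 is imported lazily so process_upload can be tested without AWS.
    import boto3

    key = json.loads(event["body"])["key"]  # assumed request body: {"key": "..."}
    url = process_upload(
        boto3.client("s3"), key, os.environ["UploadBucket"], "memleak-output"
    )
    return {"statusCode": 200, "body": json.dumps({"downloadURL": url})}
```

Keeping the S3 client as a parameter makes the flow easy to test locally with a stub before deploying.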

So that the backend can be easily recreated, and because I decided to use only serverless services, I deploy it to AWS with the following SAM template:

    AWSTemplateFormatVersion: 2010-09-09
    Transform: AWS::Serverless-2016-10-31
    Description: Siebel eScript Memory Leak Analyzer - Serverless application

    Resources:
      ## S3 buckets
      S3UploadBucket:
        Type: AWS::S3::Bucket
        Properties:
          BucketName: !Ref UploadBucketName
          CorsConfiguration:
            CorsRules:
              - AllowedHeaders:
                  - "*"
                AllowedMethods:
                  - GET
                  - PUT
                  - HEAD
                AllowedOrigins:
                  - "*"

      S3OutputBucket:
        Type: AWS::S3::Bucket
        Properties:
          BucketName: memleak-output
          CorsConfiguration:
            CorsRules:
              - AllowedHeaders:
                  - "*"
                AllowedMethods:
                  - GET
                  - PUT
                  - HEAD
                AllowedOrigins:
                  - "*"

      # HTTP API
      MyApi:
        Type: AWS::Serverless::HttpApi
        Properties:
          CorsConfiguration:
            AllowMethods:
              - GET
              - POST
              - DELETE
              - OPTIONS
            AllowHeaders:
              - "*"
            AllowOrigins:
              - "https://jpmota.net/"
            MaxAge: 3600

      ## Lambda functions
      UploadRequestFunction:
        Type: AWS::Serverless::Function
        Properties:
          FunctionName: s3-get-signed-url
          CodeUri: getSignedURL/
          Handler: app.handler
          Runtime: nodejs12.x
          Timeout: 3
          MemorySize: 128
          Environment:
            Variables:
              UploadBucket: !Ref UploadBucketName
          Policies:
            - S3WritePolicy:
                BucketName: !Ref UploadBucketName
          Events:
            UploadAssetAPI:
              Type: HttpApi
              Properties:
                Path: /uploads
                Method: get
                ApiId: !Ref MyApi

      ## Lambda functions
      MemLeakAnalyzer:
        Type: AWS::Serverless::Function
        Properties:
          FunctionName: escript-memleak-analyzer
          CodeUri: MemLeakAnalyzer/
          Handler: memleak_lambda.lambda_handler
          Runtime: python3.8
          Timeout: 90
          MemorySize: 128
          Environment:
            Variables:
              UploadBucket: memleak-upload
          Policies:
            - AWSLambdaBasicExecutionRole
            - S3ReadPolicy:
                BucketName: !Ref UploadBucketName
            - S3FullAccessPolicy:
                BucketName: !Ref S3OutputBucket
          Events:
            UploadAssetAPI:
              Type: HttpApi
              Properties:
                Path: /escript-memleak-analyzer
                Method: POST
                ApiId: !Ref MyApi

    ## Parameters
    Parameters:
      UploadBucketName:
        Default: memleak-upload
        Type: String

    ## Replace API Endpoint URL in the frontend application
    Outputs:
      APIendpoint:
        Description: "HTTP API endpoint URL - Update frontend html"
        Value: !Sub "https://${MyApi}.execute-api.${AWS::Region}.amazonaws.com"
      S3UploadBucketName:
        Description: "S3 bucket for application uploads"
        Value: !Ref UploadBucketName
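
Once the stack is deployed, the two routes defined in the template (`GET /uploads` and `POST /escript-memleak-analyzer`) can be exercised from a small client. The Python sketch below mirrors what the frontend does. The JSON field names (`uploadURL`, `key`, `downloadURL`) are assumptions, since the post does not show the Lambda responses, and `api_base` stands for the `APIendpoint` output value.

```python
import json
import urllib.request


def _request(method, url, data=None, headers=None):
    """Send one HTTP request and return the raw response body."""
    req = urllib.request.Request(url, data=data, method=method, headers=headers or {})
    with urllib.request.urlopen(req) as resp:
        return resp.read()


def analyze_csv(api_base, csv_bytes, request=_request):
    """Upload a CSV through the API and return the URL of the result file."""
    # 1. Ask the API for a pre-signed upload URL.
    signed = json.loads(request("GET", f"{api_base}/uploads"))

    # 2. Upload the file straight to S3 using that URL.
    request("PUT", signed["uploadURL"], data=csv_bytes,
            headers={"Content-Type": "text/csv"})

    # 3. Trigger the analysis and read back the download URL.
    payload = json.dumps({"key": signed["key"]}).encode("utf-8")
    result = json.loads(request(
        "POST", f"{api_base}/escript-memleak-analyzer",
        data=payload, headers={"Content-Type": "application/json"},
    ))
    return result["downloadURL"]
```

The `request` parameter exists only so the flow can be tested with a stub instead of real network calls.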

For the frontend, I later decided that, instead of hosting the HTML file in Amazon S3, I would host it on a shared hosting server that I already own, just to save some money on Route 53 🙂

To automate the deployment of the frontend files, I am using GitHub Actions, which triggers an FTP copy every time I push updated code to the repository. The “FTP Deploy” GitHub Action can easily be found in the Marketplace, but here is the .yaml code that I’m using:

    on: push
    name: 🚀 Deploy website on push
    jobs:
      web-deploy:
        name: 🎉 Deploy
        runs-on: ubuntu-latest
        steps:
          - name: 🚚 Get latest code
            uses: actions/checkout@v2

          - name: 📂 Sync files
            uses: SamKirkland/FTP-Deploy-Action@4.0.0
            with:
              server: ${{ secrets.ftp_server }}
              username: ${{ secrets.ftp_user }}
              password: ${{ secrets.ftp_password }}
              server-dir: ${{ secrets.ftp_dir }}

The links below will take you to the GitHub repositories:

2 responses to “Deploying Siebel eScript Memory Leak analyzer in AWS using serverless”

Hi, I tried to use your Siebel Memory Leak Analyzer tool (https://jpmota.net/memleak/). However, it throws an error after I submit the CSV file for analysis:

“Please wait while the file is being processed… An error has occurred. If the input file seems ok, please reach out to me on Twitter at @jpmmota.”

Can you please let me know if there is an updated version?

Hi Sahil, could you please check that the file is in UTF-8 format and is not truncated? Regards!